Abstract

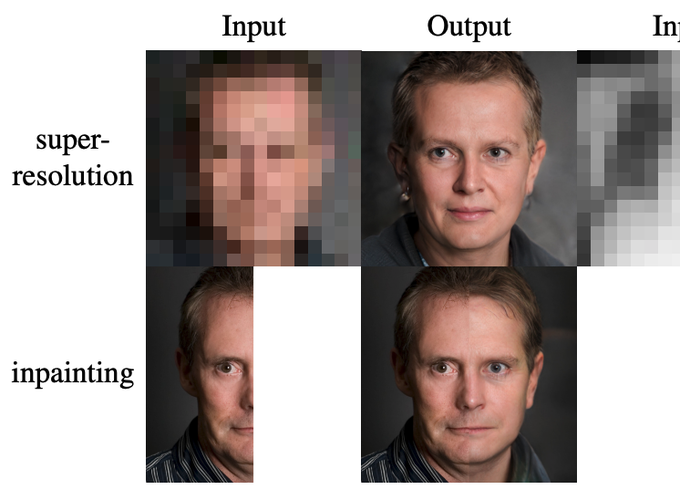

Machine learning models are commonly trained end-to-end and in a supervised setting, using paired (input, output) data. Classical examples include recent super-resolution methods that train on pairs of (low-resolution, high-resolution) images. However, these end-to-end approaches require re-training every time there is a distribution shift in the inputs (e.g., night images vs daylight) or relevant latent variables (e.g., camera blur or hand motion). In this work, we leverage state-of-the-art (SOTA) generative models (here StyleGAN2) for building powerful image priors, which enable application of Bayes’ theorem for many downstream reconstruction tasks. Our method, called Bayesian Reconstruction through Generative Models (BRGM), uses a single pre-trained generator model to solve different image restoration tasks, i.e., super-resolution and in-painting, by combining it with different forward corruption models. We demonstrate BRGM on three large, yet diverse, datasets that enable us to build powerful priors: (i) 60,000 images from the Flick Faces High Quality dataset (ii) 240,000 chest X-rays from MIMIC III and (iii) a combined collection of 5 brain MRI datasets with 7,329 scans. Across all three datasets and without any dataset-specific hyperparameter tuning, our approach yields state-of-the-art performance on super-resolution, particularly at low-resolution levels, as well as inpainting, compared to state-of-the-art methods that are specific to each reconstruction task. We will make our code and pre-trained models available online.